It’s len(str) in Python. Not str.length.
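Here is a quick sketch of the difference, using a made-up string `s`. `len()` is a built-in function, not a method or property, so asking a `str` for `.length` raises `AttributeError`.

```python
s = "hello"

# len() is Python's built-in for the length of a string (or any sized container)
print(len(s))  # 5

# str has no .length attribute; that spelling belongs to JavaScript/Java
try:
    s.length
except AttributeError as err:
    print(f"AttributeError: {err}")
```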


Is it wrong that I’m stuck trying to figure out what language this is?

Trying to figure out what language has both string.length and print(var)… Not Java, not C# (I’m pretty sure it’s .Length, not .length), and certainly not C, C++, Python, Pascal, Scheme, Haskell, JavaScript, or PHP.

3·8 months ago

The Chu Ko Nu was more of a party trick than a real weapon, though. The amount of power behind each bolt was minuscule.

The actual “rapid-fire warbow” the Chinese used was, lol, the rocket launcher. (Or really, the Koreans did it first, strapping Chinese rockets to a bunch of arrows and lighting them all at the same time, with devastating effects on the battlefield.) See Hwacha: https://en.wikipedia.org/wiki/Hwacha

Zhuge Liang’s biggest battlefield contribution in practice was probably popularizing the “Ox Cart”, aka the wheelbarrow. Shu’s army could march further because it had these contraptions powering its logistics. Kinda funny to think that things like wheelbarrows were still the stuff of sci-fi in the year 200 AD, but that’s where technology was in practice.

EDIT: The fact that Zhuge Liang’s lanterns (aka: hot air balloons) got practical usage back then is incredible though.

2·8 months ago

Honestly, if it doesn’t end with people getting impaled on spikes in front of his castle, I have a tough time believing it was Vlad, lol. Pretty much every story ended with “And then they were impaled to serve as a warning to everyone else”, like every damn time.

3·8 months ago

Ali Baba, in some ways.

My understanding of Ali Baba (and the 1001 Arabian Nights) is that they’re closer to an Arabic “Duck Tales”: fantastical stories designed more to woo children with crazy powers and nearly illogical plot structures. However, 1001 Arabian Nights became absurdly popular in Europe, far more popular than the well-respected Arabic heroes. (Much like how Duck Tales is a children’s story in American culture, but way more popular in Europe for some reason.) Or, for the American equivalent: us importing young-adult shows from Japan (lots of anime) and American adults consuming them.

For someone “like King Arthur”, an adventuring hero who is well respected in the culture they’re from (ex: the English respect King Arthur and see him as high culture), the closer Arabic equivalent is Sinbad the Sailor, rather than Ali Baba, Aladdin, or Scheherazade.

Unfortunately, if an Arabic tale came to Europe between the 1500s and 1800s, it would be called part of the “Arabian Nights”, because the original 1001 Arabian Nights was so popular that every translator in Europe would basically add it as one of Scheherazade’s sub-stories. So it’s difficult, from a Western / English-speaking lens, to see which stories are, or aren’t, respected high culture.

I’m looking through Wikipedia and have come across Antarah ibn Shaddad, a Guardian of the Nativity (yes, “that” Nativity, Jesus’s birthplace). Such a hero sounds far more like King Arthur: a heroic figure to look up to. (A lot of the 1001 Arabian Nights stories are filled with rather disgusting and backstabbing characters who aren’t really “heroes”.)

12·8 months ago

It’s hard to overstate how “legendary” the names in Romance of the Three Kingdoms are.

The Sun family who controls Wu (one of the Three Kingdoms) is the same Sun family who wrote Sun Tzu’s Art of War.

40·8 months ago

There’s this legend / historical figure from Romania called Vlad Dracula. He was a Voivode (roughly a Count in Western nobility, but with more military powers) who brutally murdered the rich and corrupt Boyars and brought order and safety to the poor.

That is, if you ignore the hundreds of impaled men, women, and children in front of his castles… Legend says he kept gold at the center of his towns to prove that all thieves were dead. If anyone openly stole the gold at the center of town, they’d be impaled.

Perhaps Vlad Dracula was too brutal by Western European standards. But IMO, Robin Hood and Vlad share an overarching tale: someone who stood up to the corrupt nobility and actually enacted a sense of justice.

Obviously, it’s 100% myth by the time people are telling stories of the Count Dracula who drinks your blood. But as a nobleman of the 1400s or so, his true story is very difficult to separate from the myths and legends. Whoever he really was, he was clearly brutal enough to have inspired so many myths about him.

Going further East, there are the many tales of Baba Yaga, a powerful and brutal witch of Siberia. There are all kinds of stories about Baba Yaga, but she usually appears in twin or triplet form, lives in a dancing hut, and wields powerful and brutal (but ironically fair) Magicks.

I wouldn’t say that Baba Yaga is like King Arthur… but Baba Yaga is very similar to the evil and brutal Morgana of Arthurian lore. Except Baba Yaga has no peer or equal: there is no King Arthur or set of knights to save society from Baba Yaga’s wrath.

Even further East are the Fables from the Romance of the Three Kingdoms of China.

The TL;DR is that China had a massive civil war at the fall of the Han Empire, around the year 200 AD. This civil war lasted three generations.

As the Han Emperor was seized by the evil Dong Zhuo, a 12-way coalition army tried to save the emperor. It was too late, however: China fell into war, and the 12 warlords soon entered a free-for-all, each vying to control all of China.

The armies kill and/or subsume each other until the rise of Shu, Wu, and Wei, the ‘winners’ of that period of chaos. And then the real crazy shit starts happening.

They use Magicks to create battlefield conditions: unlikely winds that spread fires through enemy camps. They find legendary weapons. Single men fight against armies of a thousand or more.

This crazy Wizard/Inventor named Zhuge Liang invented hot air balloons and used them as communication between troop formations. No wait, this one is actually true and not a legend.

Lots of Chinese magic and history here, as the three-way free-for-all produces a natural set of alliances (Shu and Wu were weak early on). But later, when Shu grew more powerful, Wu and Wei staged a careful betrayal, killing the God of War: Guan Yu (one of the main generals of Shu; he’s “that long-bearded guy riding the red horse” you keep seeing in every Chinese restaurant).

Romance of the Three Kingdoms is somewhere between King Arthur and the Bible in terms of importance to Chinese culture. Even modern Chinese readers understand that whoever wrote the book was a Liu Bei fanboy (aka: obviously biased, favoring Shu in every situation). But the book is incredibly influential on Chinese philosophy. It has many sayings and parables about the importance of scholarship and science (Zhuge Liang’s and Sima Yi’s inventions changing the course of battle), the importance of order and fairness (even the brutal warlord Cao Cao of Wei was well known and well regarded as a fair king), the importance of recruitment, and other such parables / philosophy about how societies can gain advantage over each other: not just through battle, but through economic power, legends, and more.

5·11 months ago

I had a pretty standard linear-list scan initially. Each time the program started, I’d check the list for some values. The list, of course, grew each time the program started. I capped the list size at like 2MB or something (I forget), but it was in the millions, and therefore in the MB range. I figured it was too small for me to care about optimization.

I was somewhat correct: even when I simulated a full-sized list, the program booted faster than I could react, so I didn’t care.

Later, I wrote some test code that exhaustively tested startup conditions. Instead of just running the startup once, I was running it millions of times. Suddenly I cared about startup speed, so I replaced it with a Hash Table so that my test-code would finish within 10 minutes (instead of taking a projected 3 days to exhaustively test all startup conditions).

Honestly, I’m more impressed by the opposite. This is one of the few times I’ve actually taken a linear list and optimized it into a hash table. Almost every other linear list I’ve used in the last 10 years of my professional coding life remains just that: a linear scan, with no one caring about performance. I’ve got linear lists doing some crazy things, even with MBs of data, and no one has ever come back to me saying they need optimization.

Do not underestimate the power of std::vector. It’s probably faster than you expect, even with O(n^2) algorithms all over the place. std::map and std::unordered_map certainly have their uses, but there are a lot of situations where std::vector is far, far, far easier to think about, so it’s my preferred solution rather than pre-optimizing to std::map ahead of time.
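To make the startup story concrete, here is a minimal Python analogue. It assumes a list of integers stands in for the real startup data, and the names `entries`, `lookups`, `startup_linear`, and `startup_hashed` are hypothetical. Python's `list` plays the linear scan and `set` plays the hash table. Absolute timings won't match C++'s `std::vector` / `std::unordered_map`; only the shape of the trade-off carries over.

```python
import timeit

# Hypothetical stand-in for the startup list: 100,000 integer entries.
entries = list(range(100_000))
lookups = [99_999, 50_000, -1]  # values checked at each "startup"

def startup_linear(values):
    # Linear scan: each `in` walks the list, O(n) per lookup.
    return [v in entries for v in values]

# Built once; a hash table with O(1) average-case membership tests.
entry_set = set(entries)

def startup_hashed(values):
    return [v in entry_set for v in values]

# Same answers either way; only the cost differs.
assert startup_linear(lookups) == startup_hashed(lookups)

# One startup is instant to a human either way. A test harness that
# "starts up" many times multiplies the per-run gap.
runs = 50
t_linear = timeit.timeit(lambda: startup_linear(lookups), number=runs)
t_hashed = timeit.timeit(lambda: startup_hashed(lookups), number=runs)
print(f"{runs} startups: linear {t_linear:.3f}s, hashed {t_hashed:.5f}s")
```

The `set` only pays for itself once the lookup count gets large, and building it costs a full pass over the data, which is why the plain list was a fine default until the exhaustive tests showed up.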

2·11 months ago

> To that end, the SAM9x60 is fantastic for widening the space for folks working in that high-end of the spectrum. But until someone or some entity produces a volume run of an SBC or drop-in module utilizing it, I can’t see why a rapid-development project inclined to use an RPi (middle end complexity) would want to incur the effort of doing their own custom design (high end complexity).

I feel like the SAM9x60D1G-I/LZB is close enough to a plug-and-play “compute module”, but you do have a point in that a compute-module isn’t quite a complete computer yet.

TI’s AM335x / Octavo OSD335x chips have a huge advantage when it comes to this particular criticism of yours. These chips are behind the Beaglebone Black, meaning you have all three tiers: the Beaglebone Black / Green as an SBC for beginners, the OSD335x SiP for intermediates, and finally the AM3351BZCEA60 (or other specific versions) for full customization.

At $50 for the SBC / Beaglebone Black, it’s a weaker buy than a Rasp. Pi. But knowing that the Beaglebone Black is part of a full ecosystem that goes all the way to the “expert” PCB layout (with an in-between SiP from Octavo Systems) is surely one of the best arguments available.

Microchip obviously isn’t trying to compete against this at all, and I think that’s fine. TI’s got the full-scale concept on lockdown. The SAM9x60’s chief advantage is (wtf??!?!) 4-layer prototypes for DDR2 routing. It’s clearly a far simpler chip and more robust to work with. Well, that and its absurdly low power usage.

3·11 months ago

Rasp. Pi products, be it the RP2040 or the Rasp. Pi boards in general, have always had horrendous power-consumption specs and even worse sleep/idle states.

1·11 months ago

How many layers does the Orange Pi Zero PCB have?

Answer: Good luck finding out. That’s not documented. But based on the layout and what I can see in screenshots, far more than 4 layers.

A schematic alone is kind of worthless. Knowing whether a BGA is designed for 6, 8, or 10 layers makes a big difference. Seeing a reference PCB implementation with exactly that layer count, so the EE knows how to modify the design for themselves, is key to customization. There are all sorts of EMI and trace-length-matching requirements that have to be met to get that CPU-to-DDR connection up and running.

Proving that a 4-layer layout like this exists is a big deal. It means that a relative beginner can work with the SAM9x60’s DDR interface on cheap 4-layer PCBs (though as I said earlier: 6 layers offer more room and are available at OSHPark, so I’d recommend a beginner work with 6 instead).

With regards to the SAM9x60D1G-I/LZB SOM vs. the Orange Pi Zero, the SAM9x60D1G-I/LZB SOM gives you access to all the remaining pins… 152 pins… of the SAM9x60. That means a full development board with full access to every feature. It’s a fundamentally different purpose: the SOM is a learning tool and a development tool for customization.

6·11 months ago

Well, my self-deprecating humor aside, I’ve of course thought about it more deeply during my research, so I don’t want to sell it too short.

SAM9x60 has a proper GPU (albeit a 2D one), full-scale Linux, and DDR2 support (easily reaching 64MB, 128MB or beyond of RAM). At $3 for DDR2 chips, the cost-efficiency is absurd (https://www.digikey.com/en/products/detail/issi-integrated-silicon-solution-inc/IS43TR16640C-125JBL/11568766): a QSPI 8Mbit (1MB) SRAM chip costs basically the same as 1Gbit (128MB) of RAM.

Newhaven Displays offers various 16-bit TFT/LCD screens (https://newhavendisplay.com/tft-displays/standard-displays/) at a variety of price points. Let’s take, say… a 400x300-pixel 16-bit screen, for instance. How much RAM do you need for the framebuffer? (I dunno: this one https://newhavendisplay.com/4-3-inch-ips-480x272px-eve2-resistive-tft/ or something close.)

Oh right, 400 x 300 x 2 bytes per pixel, and we’re already at 240kB, meaning the entire field of MSP430, ATMega328, ARM Cortex-M0, and even ARM Cortex-M4 parts is dead on the framebuffer alone. Now let’s say we have 10 frames of animation we’d want to play, and bam, at 2.4MB we’re already well beyond what a $3 QSPI SRAM chip will offer us.
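Here is the framebuffer arithmetic above as a small Python check. It assumes 2 bytes per pixel for 16-bit color, binary chip densities (8 Mbit = 1 MiB, 1 Gbit = 128 MiB), and no compression or double-buffering. The helper name `framebuffer_bytes` is hypothetical.

```python
BYTES_PER_PIXEL = 2                 # 16-bit color
QSPI_SRAM_BYTES = 8 * 2**20 // 8    # 8 Mbit QSPI SRAM = 1 MiB
DDR_BYTES = 2**30 // 8              # 1 Gbit DDR chip = 128 MiB

def framebuffer_bytes(width, height, bpp=BYTES_PER_PIXEL):
    """Bytes for one uncompressed frame."""
    return width * height * bpp

one_frame = framebuffer_bytes(400, 300)
ten_frames = 10 * one_frame

print(one_frame)                     # 240000 (240 kB)
print(ten_frames)                    # 2400000 (2.4 MB)
print(ten_frames > QSPI_SRAM_BYTES)  # True: 10 frames don't fit in the SRAM
print(DDR_BYTES // one_frame)        # 559 full frames fit in 1 Gbit of DDR
print(framebuffer_bytes(480, 272))   # 261120 for the linked 480x272 panel
```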

But let’s look at one of the brother chips really quick: Microchip’s SAMA5D4. Though more difficult to boot up, this one comes with an H.264 decoder. Forget “frames of animation”; this baby straight up supports MP4 videos on a full-scale Linux platform.

Well, maybe you’d want a Rasp. Pi to run that, but a Rasp. Pi 4 can hit 6000mW of power consumption, far beyond the means of typical battery packs of the ~3-inch variety. Drop the power consumption to 300mW (SAMA5D4 + DDR2 RAM) + 300mW (LCD screen), about 600mW total, and suddenly we’re in the realm of AAA batteries.
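Here is a rough runtime estimate for the power budget above. The 6000mW, 300mW, and 300mW figures come from the comment. The battery side is an assumption: a hypothetical pack of 4 AAA alkaline cells with about 1.2 Wh usable each, which is optimistic at higher drain. It also ignores regulator losses.

```python
# Loads from the comment above, in milliwatts.
RPI4_PEAK_MW = 6000
SAMA5D4_DDR_MW = 300
LCD_MW = 300

# ASSUMPTIONS (hypothetical, not measured): 4 AAA alkaline cells,
# ~1.2 Wh usable each. Real capacity drops as the discharge rate rises.
CELLS = 4
CELL_WH = 1.2

def runtime_hours(load_mw, cells=CELLS, cell_wh=CELL_WH):
    """Idealized runtime: stored energy divided by a constant load."""
    return cells * cell_wh / (load_mw / 1000)

custom_mw = SAMA5D4_DDR_MW + LCD_MW
print(custom_mw)                               # 600
print(f"{runtime_hours(custom_mw):.1f} h")     # 8.0 h
print(f"{runtime_hours(RPI4_PEAK_MW):.1f} h")  # 0.8 h
```

Even with wide error bars on the battery assumption, the 10x gap in load is what moves the design from "needs a big pack" into AAA territory.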

So we get to the point where I can say: I can build you a 3" scale device powered by AAA batteries that runs full Linux and supports H.264 decode animations running on a Touch-screen interface, fully custom with whatever chips/whatever you want on it. Do I know what it does yet? No. Lol, I haven’t been able to figure that out yet. But… surely this is a useful base to start thinking of ideas.

4·11 months ago

So yeah, I think those are basically the two paths modern hobbyists have taken. If we need “custom” electronics, it’s “obviously an Arduino” (or a similar competitor: MSP430, ESP32, STM32F3, Teensy, etc. etc.). We prototype with DIPs and then make a cheap 2-layer board that carries the microcontroller plus the custom bit of wiring we need, and call it a day. Meanwhile, the “Computer” world is a world of Ethernet, CANbus, PCIe, SD-MMC connectors and whatever. It’s about standards and getting things to easily fit into standards, and far less about actual customization.

And these two paths are separated in our brains. They’re just different worlds and we don’t think too much about it.

I wanted to talk about SiP chips but I couldn’t find the space for it. I guess my overall point is… that today’s entry-level MPUs can create “custom Arduino-like” designs, except with full-scale Linux / microprocessor-level compute power (well, on the low end at least; all of these MPUs are far slower than a Rasp. Pi).

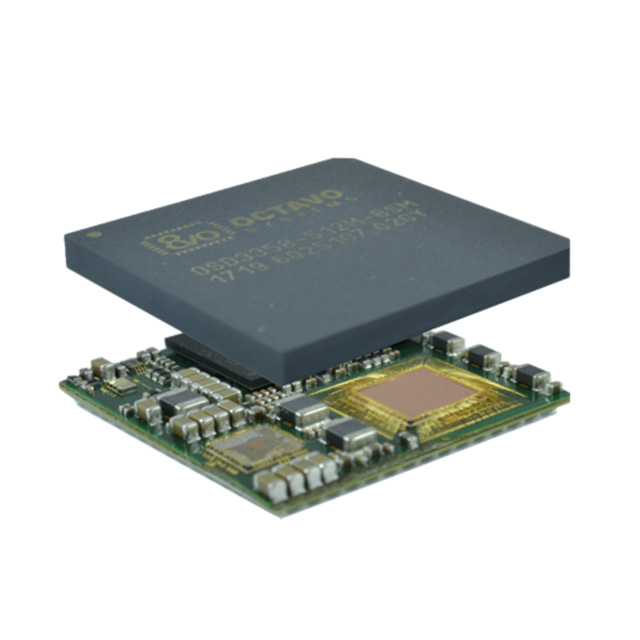

I was talking about SAM9x60 earlier, but TI’s / Octavo Systems’ SiP based on the AM335x has a very intriguing demo that you won’t believe.

This OSD335x SiP is a BGA system-in-package: a fully bootable Linux computer, complete with DDR2 RAM, onboard flash for a filesystem, a power controller, and yes, even a PDN (power delivery network) to ensure smooth and consistent bootups.

What’s suddenly possible here is booting a full-scale Linux box in a 20mm x 20mm footprint, even with some of the dumbest PCB design imaginable. The amount of sophistication going into these chips today is unreal… and it’s making “build your own SBC from scratch” possible on a hobbyist’s budget, scale, and level of study.

So what today represents is: “Why not go full custom design with MPUs?” Yes, we’ve traditionally reached for the MSP430 or ATMega328 for this problem, but maybe it’s not so bad to design around a more powerful system in practice. Different manufacturers have gone different routes here. TI is working with Octavo Systems and pushing these SiPs, of course; Microchip is sticking with open-source hardware and documentation for people more comfortable with PCB design (though Microchip also offers a SiP with on-board DDR2, but without a power controller like Octavo Systems’ solution), etc. etc. All with varying levels of simplicity, configurability, and so forth.

I ended my post with the joke about drones because… that’s the most obvious good use of this tech I can immediately think of. Drones are size/weight constrained (the lighter you make everything, the lighter your motors can be, and the lighter your battery can be, making everything lighter again). So big, heavy connectors, while good for compatibility, are a big drawback. You want a full custom PCB design that removes everything unnecessary, because you really want to save every gram possible and make everything as light as reasonable. If you’re just going to slap an Ethernet port and a CANbus onto a computer, I don’t think these SiPs or MPUs are really that useful (might as well buy a Rasp. Pi at that point).

But if you need a customized design with specific weight and power-usage specs, well… custom is the way to go. And a custom MSP430 design (or its 16-bit / 8-bit competitors, or a Cortex-M0+) leaves much to be desired from a programming perspective.

11·1 year ago

That’s not what storage engineers mean when they say “bitrot”.

“Bitrot”, in the context of ZFS and BTRFS, means the situation where a hard drive’s “0” gets randomly flipped to “1” (or vice versa) during storage. It is a well-known problem and can happen within “months”. Especially since a 20TB drive these days is a collection of 160 trillion bits, there’s a high chance that at least some of those bits malfunction over a period of ~double-digit months.
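Here is the bit count, plus a rough sense of why "high chance" follows. The per-bit flip probability `p_flip` below is a made-up illustrative number, not a measured rate, and flips are assumed to be independent.

```python
import math

DRIVE_BYTES = 20 * 10**12   # 20 TB, in decimal terabytes as drive vendors count
bits = DRIVE_BYTES * 8
print(bits)                 # 160000000000000, i.e. 160 trillion bits

# HYPOTHETICAL per-bit flip probability over some storage period.
p_flip = 1e-14
expected_flips = bits * p_flip

# With independent flips, P(at least one) = 1 - (1 - p)^n,
# which is approximately 1 - exp(-n*p) for tiny p.
p_any = -math.expm1(-expected_flips)
print(f"expected flips: {expected_flips:.1f}, P(at least one): {p_any:.2f}")
# expected flips: 1.6, P(at least one): 0.80
```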

Each problem has a solution. In this case, Bitrot is “solved” by the above procedure because:

- Bitrot usually doesn’t happen within single-digit months. So regular ~6-month scrubs nearly guarantee that any bitrot you find will be limited in scope, just a few bits at most.

- Filesystems like ZFS or BTRFS are designed to handle many, many bits of bitrot safely.

- Scrubbing is a process where you read, and if necessary restore, any files where bitrot has been detected.
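Here is a toy model of what a scrub does. It assumes a two-way mirror with a checksum recorded for each block at write time. It only illustrates the idea (read everything, verify checksums, rewrite bad copies from a good one). It is not ZFS's or BTRFS's actual on-disk format or code, and `MirroredStore` and `flip_bit` are hypothetical names.

```python
import hashlib

def checksum(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

def flip_bit(data: bytes, bit: int) -> bytes:
    """Simulate bitrot: flip a single bit."""
    buf = bytearray(data)
    buf[bit // 8] ^= 1 << (bit % 8)
    return bytes(buf)

class MirroredStore:
    """Two-way mirror with a per-block checksum stored at write time."""

    def __init__(self, blocks):
        self.copies = [list(blocks), list(blocks)]  # two "disks"
        self.sums = [checksum(b) for b in blocks]

    def scrub(self) -> int:
        """Verify every block on every copy; repair bad copies. Returns repairs made."""
        repaired = 0
        for i, expected in enumerate(self.sums):
            good = [disk[i] for disk in self.copies if checksum(disk[i]) == expected]
            if not good:
                raise OSError(f"block {i}: every copy is corrupt, data lost")
            for disk in self.copies:
                if checksum(disk[i]) != expected:
                    disk[i] = good[0]  # rewrite the rotted copy from a good one
                    repaired += 1
        return repaired

store = MirroredStore([b"block-0", b"block-1", b"block-2"])
store.copies[1][2] = flip_bit(store.copies[1][2], 3)  # rot one bit on disk 2
print(store.scrub())  # 1: one copy repaired
print(store.scrub())  # 0: nothing left to fix
```

This also shows why the scrub interval matters. The repair only works while at least one copy of each block is still good, and the `OSError` branch models the case where it isn't.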

Of course, if hard drives are of noticeably worse quality than expected (ex: if you do have a large number of failures in a shorter time frame), or if you’re not using the right filesystem, or if you go too long between checks (ex: taking 25 months to scrub for bitrot instead of just 6 months), then you might lose data. But we can only plan for the “expected” kinds of bitrot: the kinds that happen over 25 months, or 50 months, or so.

If you’ve gotten screwed by a hard drive (or SSD) that bitrots away in like 5 days or something awful (maybe someone dropped the hard drive and the head scratched a ton of the data away), then there’s nothing you can really do about that.


5·1 year ago

If you have a NAS, then just put iSCSI disks on the NAS, and network-share those iSCSI fake-disks to your mini-PCs.

iSCSI is “pretend to be a hard drive over the network”. iSCSI can sit “on top of” ZFS or BTRFS, meaning your scrubs / scans will fix any issues. So your mini-PC can have a small C: drive, but be configured so that most of its storage lives on the D: iSCSI / network drive.

iSCSI is very low-level. Windows literally thinks it’s dealing with a (slow) hard drive over the network. As such, it works even in complex situations like Steam installations, albeit at slower network speeds (it’s gotta talk to the NAS before the data comes in) rather than faster direct-connection hard drive (or SSD) speeds.

Bitrot is a solved problem. It is solved by using bitrot-resilient filesystems with regular scans / scrubs. You build everything on top of solved problems, so that you never have to worry about the problem ever again.


26·1 year ago

Wait, what’s wrong with issuing “ZFS Scan” every 3 to 6 months or so? If it detects bitrot, it immediately fixes it. As long as there wasn’t too much bitrot, most of your data should be fixed. EDIT: I’m a dumb-dumb. The term was “ZFS scrub”, not scan.

If you’re playing with multiple computers, “choosing” one to be a NAS and being extremely careful with the data it’s storing makes sense. Regularly scanning all files and attempting repairs (which is just a few clicks with most NAS software) is incredibly easy, and probably could be automated.
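Here is a minimal sketch of the "could be automated" part. It assumes a pool named `tank` (hypothetical) and that you run the script from cron or a systemd timer. The real command is `zpool scrub <pool>`. The scheduling helpers are hypothetical, and the script defaults to a dry run, so it only returns the command. In a real setup you would read the last scrub date from `zpool status` instead of passing it in.

```python
import datetime as dt
import subprocess

SCRUB_INTERVAL = dt.timedelta(days=90)  # short end of "every 3 to 6 months"

def scrub_due(last_scrub: dt.date, today: dt.date, interval=SCRUB_INTERVAL) -> bool:
    return today - last_scrub >= interval

def scrub_command(pool: str) -> list[str]:
    # `zpool scrub <pool>` verifies every block's checksum and repairs from redundancy.
    return ["zpool", "scrub", pool]

def maybe_scrub(pool: str, last_scrub: dt.date, today: dt.date, dry_run: bool = True):
    """Return the command if a scrub is due (running it unless dry_run), else None."""
    if not scrub_due(last_scrub, today):
        return None
    cmd = scrub_command(pool)
    if not dry_run:
        subprocess.run(cmd, check=True)  # needs ZFS tools and root privileges
    return cmd

print(maybe_scrub("tank", dt.date(2024, 1, 1), dt.date(2024, 2, 1)))
# None (31 days since the last scrub)
print(maybe_scrub("tank", dt.date(2024, 1, 1), dt.date(2024, 5, 1)))
# ['zpool', 'scrub', 'tank'] (121 days)
```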

7·1 year ago

76F to 78F in the summer.

68F in the winter.

My Dad does 85F summer and 65F winter though. I thought I was being luxurious with my settings lol.

I do run a dehumidifier in the summer and a humidifier in the winter though. Humidity control is almost more important IMO for comfort.

Honestly, in practice Docker is solving a lot of these problems.

It’s kinda stupid that so many dependencies need to be tracked that it’s easier to spin up a (VM-like) environment to run Linux binaries properly. But… it does work. With a bit more spit-shine / polish, Docker is probably the way forward on this issue.

But Docker is just not there yet. Because Linux is open source, there are no licensing penalties for carrying an entire Linux distro along with your binaries all over the place. And who cares if a binary is like 4GB? Does it work? Yes. Does it work across many versions of Linux? Yes (for… the right versions of Linux with the right versions of Docker, but yes!! It works).

Get those Dockers a bit more long-term stability and compatibility, and I think we’re going somewhere with that. Hard drives these days are $150 for 12TB and SSDs are $80 for 2TB; we can afford to store those fat binaries, as inefficient as it all feels.
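Here are some numbers on "we can afford it," using the drive prices quoted above (decimal TB, so 1TB = 1000GB) and the "like 4GB" binary size from the previous paragraph. `cost_usd` is a hypothetical helper.

```python
# Drive prices quoted above.
HDD_USD, HDD_TB = 150, 12
SSD_USD, SSD_TB = 80, 2

def cost_usd(gb, price_usd, capacity_tb):
    """Dollar cost of `gb` gigabytes at a drive's price per decimal TB."""
    return price_usd / (capacity_tb * 1000) * gb

image_gb = 4
print(f"HDD: ${cost_usd(image_gb, HDD_USD, HDD_TB):.2f}")  # HDD: $0.05
print(f"SSD: ${cost_usd(image_gb, SSD_USD, SSD_TB):.2f}")  # SSD: $0.16
```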

I did have a throwaway line about musl problems, but honestly, we’ve already got incredibly fat Docker images lying around everywhere. Why are the OSS guys trying to save like 100MB here and there when no one actually cares? Just run glibc, and stop adding incompatibilities for, honestly, tiny amounts of space savings.

I’ve begun to pay for Kagi.com.

I wouldn’t say that it “blows my mind” or anything, just that it seems to work as expected (which is more than I can say for Google). There’s also a “Fediverse” button on Kagi.com, so it can search lemmy.world (and more??).